The Essential Data Annotation Tech Assessment Answers Every Data Scientist Needs

As a data scientist, I know all too well the challenges of navigating the ever-evolving world of machine learning and AI. And at the heart of it all lies the critical task of data annotation – the process of labeling and tagging data to train our models to perform their magic.

It’s a daunting task, I’ll admit. With so many tools and techniques to choose from, it can feel like trying to find a needle in a haystack. But fear not, my fellow data enthusiasts! In this article, I’m going to share my answers to the data annotation tech assessment questions that have kept me up at night, so you can make informed decisions and take your data annotation game to the next level.

Introduction: Your Guide to Navigating the Data Annotation Landscape

Data annotation types

Let’s start with the basics. Data annotation is the lifeblood of machine learning, providing the fuel for our algorithms to learn and grow. Whether we’re talking about images, text, audio, or video, getting that data properly labeled and tagged is the key to unlocking accurate and efficient models.

And let me tell you, the quality of that annotated data is no joke. It’s the foundation upon which our entire machine learning strategy is built. Get it wrong, and your models will be about as useful as a chocolate teapot. But get it right, and watch as your creations start to shine like the North Star.

Understanding the Data Annotation Landscape

What is data annotation?

Data annotation is the process of labeling and tagging raw data so that machine learning models can learn from it: drawing a box around each car in a street photo, highlighting people’s names in a news article, or transcribing a recorded phone call. The same principle holds across images, text, audio, and video: a supervised model can only be as reliable as the labels it is trained on.

Key Considerations for Data Annotation Tech Assessment Answers

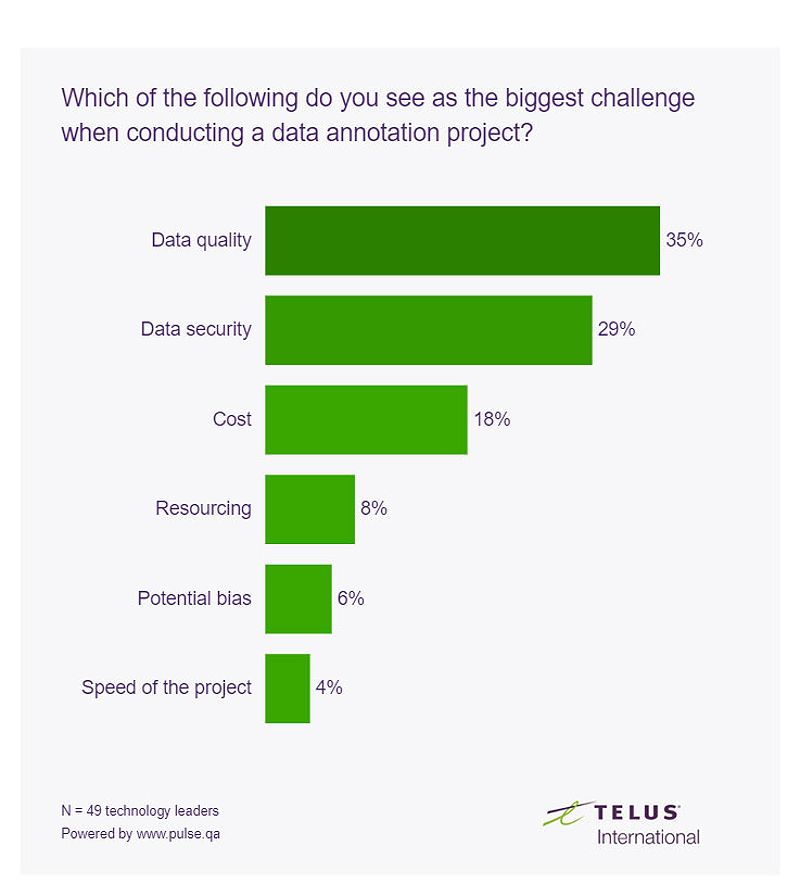

Chart depicting different challenges in data annotation

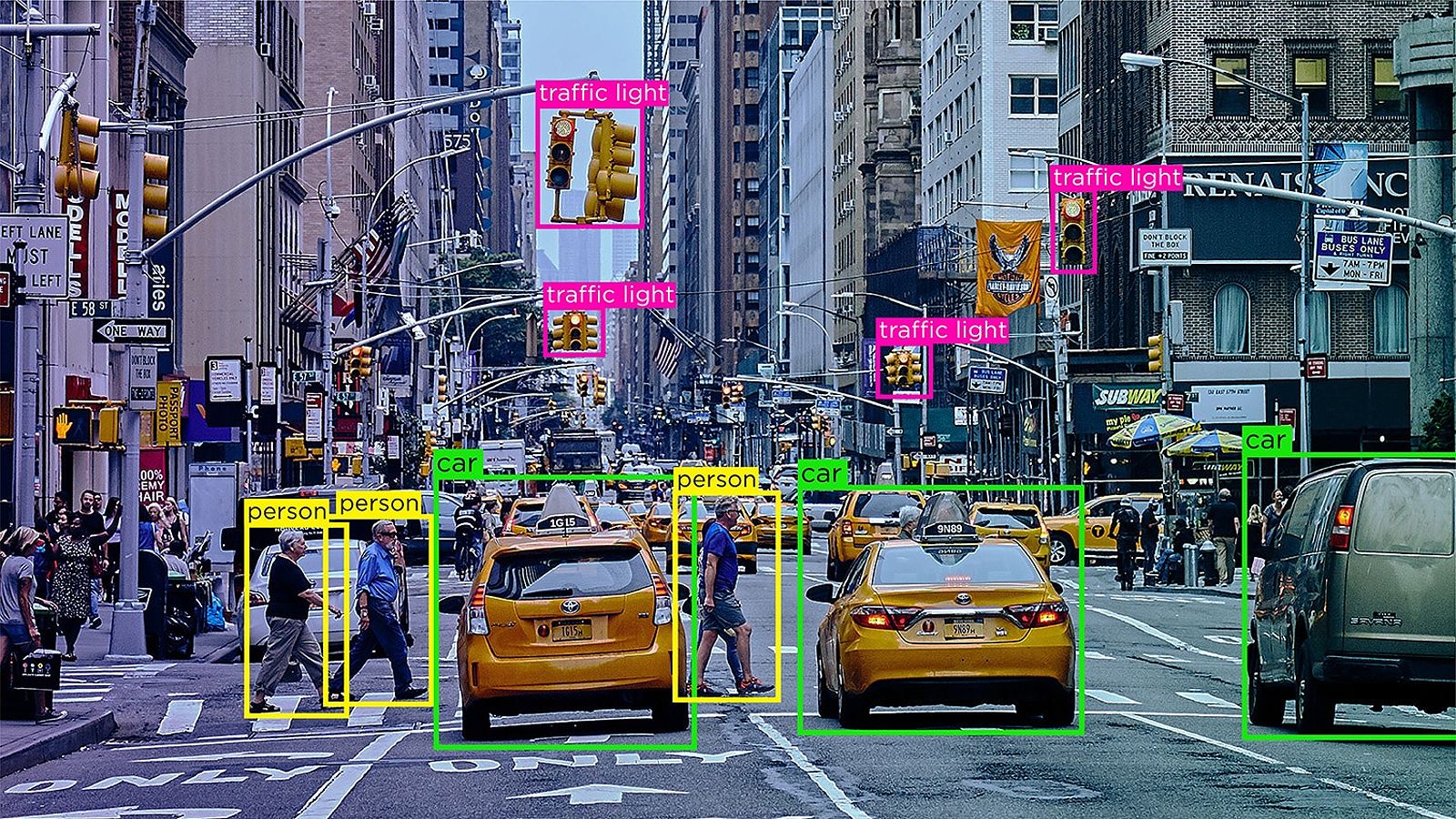

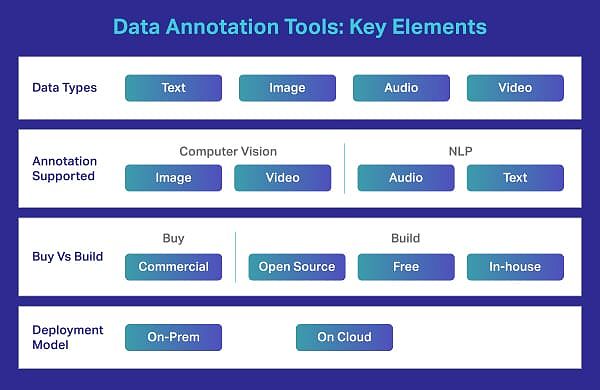

When evaluating data annotation tools, there are several critical factors to consider. First, the type of data you’re working with determines which annotation techniques apply. Images may call for bounding boxes, segmentation masks, or keypoint annotation. Text may require semantic annotation, intent labeling, or named entity tagging. Audio and video add further tasks, such as transcription, speaker identification, and event tagging.
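To make the difference between these annotation types concrete, here is a minimal Python sketch of how a bounding box and a text entity span might be stored. The class and field names are hypothetical rather than any particular tool’s export format; the box follows the COCO-style [x, y, width, height] pixel convention, and the span uses character offsets with an exclusive end.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Image annotation: a labeled box in COCO-style [x, y, width, height] pixels."""
    label: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    def to_corners(self) -> tuple[float, float, float, float]:
        """Convert to (x_min, y_min, x_max, y_max), another common box format."""
        return (self.x, self.y, self.x + self.width, self.y + self.height)


@dataclass
class EntitySpan:
    """Text annotation: a labeled span given by character offsets [start, end)."""
    label: str
    start: int
    end: int

    def text_in(self, document: str) -> str:
        """Return the substring of the document that this span covers."""
        return document[self.start:self.end]


doc = "Ada Lovelace was born in London."
person = EntitySpan(label="PERSON", start=0, end=12)
place = EntitySpan(label="LOCATION", start=25, end=31)
car = BoundingBox(label="car", x=10, y=20, width=100, height=50)

print(person.text_in(doc))  # Ada Lovelace
print(place.text_in(doc))   # London
print(car.to_corners())     # (10, 20, 110, 70)
```

Storing spans as offsets rather than copied strings keeps each label tied to an exact position in the source text, which matters when the same word appears more than once.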

Scalability and performance are also important considerations. As data volumes grow, you need tools that can handle the load and keep annotation throughput high. Data quality and validation matter just as much: look for platforms with robust quality control features, such as reviewer workflows, consensus labeling (where several annotators label the same item and their answers are reconciled), and automated checks that catch malformed or inconsistent annotations.
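A consensus model can be as simple as a majority vote across annotators who labeled the same item. The sketch below assumes several labels per item; the function name and the 0.6 agreement threshold are illustrative choices, and commercial platforms may weight annotators differently.

```python
from collections import Counter


def consensus_label(labels: list[str], min_agreement: float = 0.6):
    """Majority-vote consensus over several annotators' labels for one item.

    Returns (label, agreement) when the top label's share of votes reaches
    min_agreement, otherwise (None, agreement) so the item can go to review.
    """
    if not labels:
        raise ValueError("need at least one label")
    top_label, top_count = Counter(labels).most_common(1)[0]
    agreement = top_count / len(labels)
    if agreement >= min_agreement:
        return top_label, agreement
    return None, agreement


votes = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["cat", "dog", "cat"],
    "img_003": ["cat", "dog", "fox"],
}
for item_id, labels in votes.items():
    label, agreement = consensus_label(labels)
    status = label if label is not None else "NEEDS REVIEW"
    print(f"{item_id}: {status} (agreement {agreement:.2f})")
```

Items that fall below the threshold are exactly the ones worth a reviewer’s time, so the vote doubles as a triage step.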

Evaluating Data Annotation Tools

Data labeling/annotation tool

When assessing data annotation tools, there are several key features to consider. The annotation interface should be intuitive and fast to use, since small inefficiencies multiply across thousands of items. The tool should also support the specific annotation types your project needs, whether bounding boxes, segmentation, or other techniques. Finally, look for quality control and management features, such as validation rules, review queues, and progress tracking, that keep annotated data accurate and consistent.
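Automated validation rules are one kind of quality control feature worth looking for. This hypothetical sketch rejects box annotations whose label falls outside the project’s taxonomy, or whose box is empty or extends past the image edges; the taxonomy, field names, and image size are assumptions for illustration.

```python
from dataclasses import dataclass

ALLOWED_LABELS = {"car", "pedestrian", "cyclist"}  # hypothetical project taxonomy


@dataclass
class BoxAnnotation:
    item_id: str
    label: str
    x: float
    y: float
    width: float
    height: float


def validate(ann: BoxAnnotation, image_width: int, image_height: int) -> list[str]:
    """Return a list of problems found; an empty list means the annotation passed."""
    problems = []
    if ann.label not in ALLOWED_LABELS:
        problems.append(f"unknown label {ann.label!r}")
    if ann.width <= 0 or ann.height <= 0:
        problems.append("box has zero or negative size")
    outside = (
        ann.x < 0
        or ann.y < 0
        or ann.x + ann.width > image_width
        or ann.y + ann.height > image_height
    )
    if outside:
        problems.append("box extends outside the image")
    return problems


batch = [
    BoxAnnotation("img_1", "car", 10, 10, 50, 30),
    BoxAnnotation("img_2", "truck", 10, 10, 50, 30),
    BoxAnnotation("img_3", "cyclist", 600, 400, 100, 100),
]
for ann in batch:
    issues = validate(ann, image_width=640, image_height=480)
    print(ann.item_id, "OK" if not issues else issues)
```

Running checks like these at submission time, rather than after export, lets annotators fix mistakes while the item is still on screen.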

Addressing Common Data Annotation Challenges

Data annotation comes with its own set of challenges. Data bias and fairness are important considerations, as annotations can reflect the biases of the human labelers. Using diverse annotation teams and employing bias detection techniques, such as checking whether individual annotators label systematically differently from their peers, can help mitigate these issues.

Data privacy and security are also crucial, particularly when working with sensitive information such as medical records or customer communications. Annotation workflows must comply with applicable regulations, such as GDPR or HIPAA, and apply appropriate data protection measures, like access controls and redaction of personal details.

Finally, comparing the cost and resource requirements of different annotation solutions helps you optimize budgets and maximize return on investment.
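As a simple bias detection technique, you can compare how often each annotator applies a given label with the rate across the whole team. This is a minimal sketch under assumed data (pairs of annotator ID and label) and an arbitrary 0.2 tolerance; a flagged annotator is a prompt to investigate, not proof of bias, since annotators may have received different mixes of items.

```python
from collections import Counter


def annotator_label_rates(records: list[tuple[str, str]], label: str) -> dict[str, float]:
    """For each annotator, the share of their items that received `label`."""
    totals: Counter = Counter()
    hits: Counter = Counter()
    for annotator, assigned in records:
        totals[annotator] += 1
        if assigned == label:
            hits[annotator] += 1
    return {annotator: hits[annotator] / totals[annotator] for annotator in totals}


def flag_outliers(rates: dict[str, float], overall_rate: float, tolerance: float = 0.2) -> list[str]:
    """Annotators whose rate differs from the team-wide rate by more than `tolerance`."""
    return sorted(a for a, rate in rates.items() if abs(rate - overall_rate) > tolerance)


# Hypothetical moderation task: each pair is (annotator_id, label given to one comment).
records = (
    [("ann_a", "toxic")] + [("ann_a", "ok")] * 3
    + [("ann_b", "toxic")] + [("ann_b", "ok")] * 3
    + [("ann_c", "toxic")] * 3 + [("ann_c", "ok")]
)
rates = annotator_label_rates(records, "toxic")
overall = sum(1 for _, assigned in records if assigned == "toxic") / len(records)
print(rates)                          # {'ann_a': 0.25, 'ann_b': 0.25, 'ann_c': 0.75}
print(flag_outliers(rates, overall))  # ['ann_c']
```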

Best Practices for Data Annotation

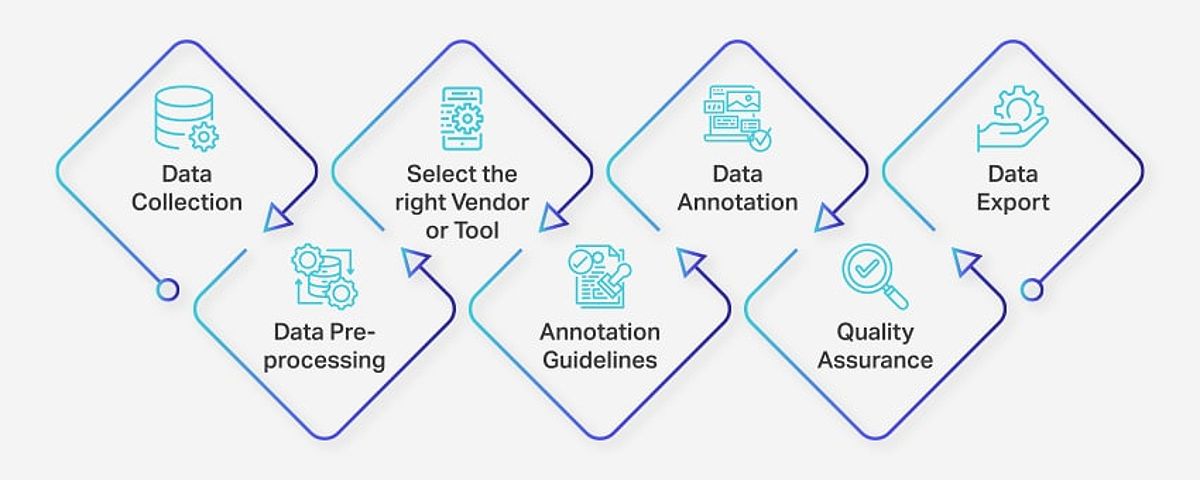

Three key steps in data annotation and data labeling projects

To improve data annotation practices, follow a few best practices. Write clear, unambiguous annotation guidelines, with examples of edge cases, so that every annotator applies labels the same way. Build in quality control and validation, such as second reviews of a sample of items, consensus labeling, and automated checks, to keep annotated data accurate. Take an iterative approach: start with a small pilot batch, fix the guidelines where annotators disagree or make mistakes, and scale up only once quality is stable, evaluating model performance as the dataset grows. Finally, keep communication open between data scientists, annotators, and stakeholders so that questions and ambiguities surface early.
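The double-check and start-small practices can be combined: send a reproducible random sample of a pilot batch for second review, and scale up only if the reviewed error rate is low enough. The function names, the 10% sample size, and the 5% error threshold below are hypothetical and should be set per project.

```python
import random


def audit_sample(item_ids: list[str], fraction: float = 0.1, seed: int = 0) -> list[str]:
    """Pick a reproducible random subset of annotated items for a second review."""
    k = max(1, round(len(item_ids) * fraction))
    rng = random.Random(seed)  # fixed seed so the same sample can be re-drawn later
    return sorted(rng.sample(item_ids, k))


def ready_to_scale(reviewed: dict[str, bool], max_error_rate: float = 0.05) -> bool:
    """Decide whether a pilot batch is good enough to scale up.

    `reviewed` maps item_id -> True if the reviewer accepted the annotation.
    """
    errors = sum(1 for ok in reviewed.values() if not ok)
    return errors / len(reviewed) <= max_error_rate


pilot = [f"item_{i:03d}" for i in range(200)]
to_review = audit_sample(pilot, fraction=0.1)
print(len(to_review), "items sent for a second review")

# Suppose reviewers rejected 3 of the 20 sampled annotations.
reviewed = {item: True for item in to_review}
for item in to_review[:3]:
    reviewed[item] = False
print("scale up" if ready_to_scale(reviewed) else "revise guidelines and rerun the pilot")
```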

Leveraging Outsourced Data Annotation Services

Outsourced data annotation services can provide valuable expertise and resources. These specialized providers offer access to trained annotators, advanced annotation tools, and established quality control processes. When considering an outsourced partner, research their track record of delivering accurate and consistent results, for example by running a small pilot on a sample of your own data and scoring it against labels you trust. They should also be able to handle the specific data types and requirements of your project, and they should have data privacy and security protocols in place to protect sensitive information.
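One common privacy safeguard before sharing data with an outside vendor is to redact personal details. The regular expressions here are deliberately simple illustrations that will miss many formats (and can over-match strings such as dates); production pipelines typically rely on dedicated PII detection tools.

```python
import re

# Illustrative patterns only: real PII detection needs far broader coverage
# (names, addresses, ID numbers, locale-specific phone formats), and the phone
# pattern below can also match other digit runs such as dates.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


ticket = "Customer jane.doe@example.com called from +1 (555) 010-2345 about a refund."
print(redact(ticket))  # Customer [EMAIL] called from [PHONE] about a refund.
```

Replacing values with typed placeholders, rather than deleting them, keeps the sentence structure intact so annotators can still label the text.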

FAQ

What are some of the most popular data annotation tools available today?

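Some of the top data annotation tools on the market include Labelbox, Playment, Roboflow, SuperAnnotate, and Hive. Together they cover a wide range of annotation capabilities, from image and video to text and audio, along with robust quality control features. Individual platforms differ in focus (Roboflow, for example, centers on computer vision), so check that a tool supports your data types before committing.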

How can I assess the quality of annotated data?

You can evaluate the quality of annotated data with inter-annotator agreement: have multiple annotators label the same items and compare their results, typically with a statistic such as Cohen’s kappa that corrects raw agreement for agreement expected by chance. Gold standard datasets help too: mix a set of items with expert-verified labels into the work and measure each annotator’s accuracy on them. Finally, automated quality checks and consensus models can catch and resolve inconsistencies or errors in the annotated data.
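Cohen’s kappa is a standard inter-annotator agreement statistic for two annotators: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The sketch below computes it alongside a simple gold-standard accuracy score; the function names and sample labels are made up for illustration. For more than two annotators, statistics such as Fleiss’ kappa or Krippendorff’s alpha are used instead.

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items, in the same order."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    # p_o: fraction of items on which the two annotators agree.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # p_e: chance agreement implied by each annotator's own label frequencies.
    freq_a, freq_b = Counter(a), Counter(b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        return float("nan")  # undefined: both used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


def gold_accuracy(labels: dict[str, str], gold: dict[str, str]) -> float:
    """Share of gold-standard items (that were labeled) which were labeled correctly."""
    scored = [item for item in gold if item in labels]
    if not scored:
        raise ValueError("no gold-standard items were labeled")
    return sum(labels[item] == gold[item] for item in scored) / len(scored)


ann_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "pos"]
ann_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos", "pos", "pos"]
print(f"kappa = {cohens_kappa(ann_1, ann_2):.3f}")  # kappa = 0.583

gold = {"q1": "pos", "q2": "neg", "q3": "pos", "q4": "neg"}
labels = {"q1": "pos", "q2": "neg", "q3": "neg", "q4": "neg", "q5": "pos"}
print(f"gold accuracy = {gold_accuracy(labels, gold):.2f}")  # gold accuracy = 0.75
```

Here the two annotators agree on 80% of items, but because both use "pos" 60% of the time, chance alone would produce 52% agreement, which is why kappa lands well below 0.8.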

What are the ethical considerations involved in data annotation?

When it comes to data annotation, there are a few key ethical considerations to keep in mind. First and foremost, data privacy and security are crucial, especially when dealing with sensitive information. Mitigating bias in the annotated data is also essential, because models trained on biased labels can reproduce and even amplify that bias. Finally, establishing clear guidelines for the appropriate use of annotated data helps ensure responsible and ethical practices.

Conclusion: Unlock the Power of Annotated Data

There you have it, my fellow data scientists – the essential tech assessment answers you need to navigate the ever-evolving world of data annotation. From understanding the landscape to evaluating the tools, addressing the challenges, and implementing best practices, I’ve got you covered.

But remember, the journey doesn’t end here. As the machine learning and AI landscape continues to evolve, staying on top of the latest trends and best practices is crucial. Embrace the power of collaboration, keep your finger on the pulse of the industry, and never stop learning. Because when it comes to data annotation, the sky’s the limit, my friends.

So, what are you waiting for? It’s time to dive in, put these insights to work, and unlock the full potential of your annotated data. The future is ours for the taking, and I can’t wait to see what we accomplish together.